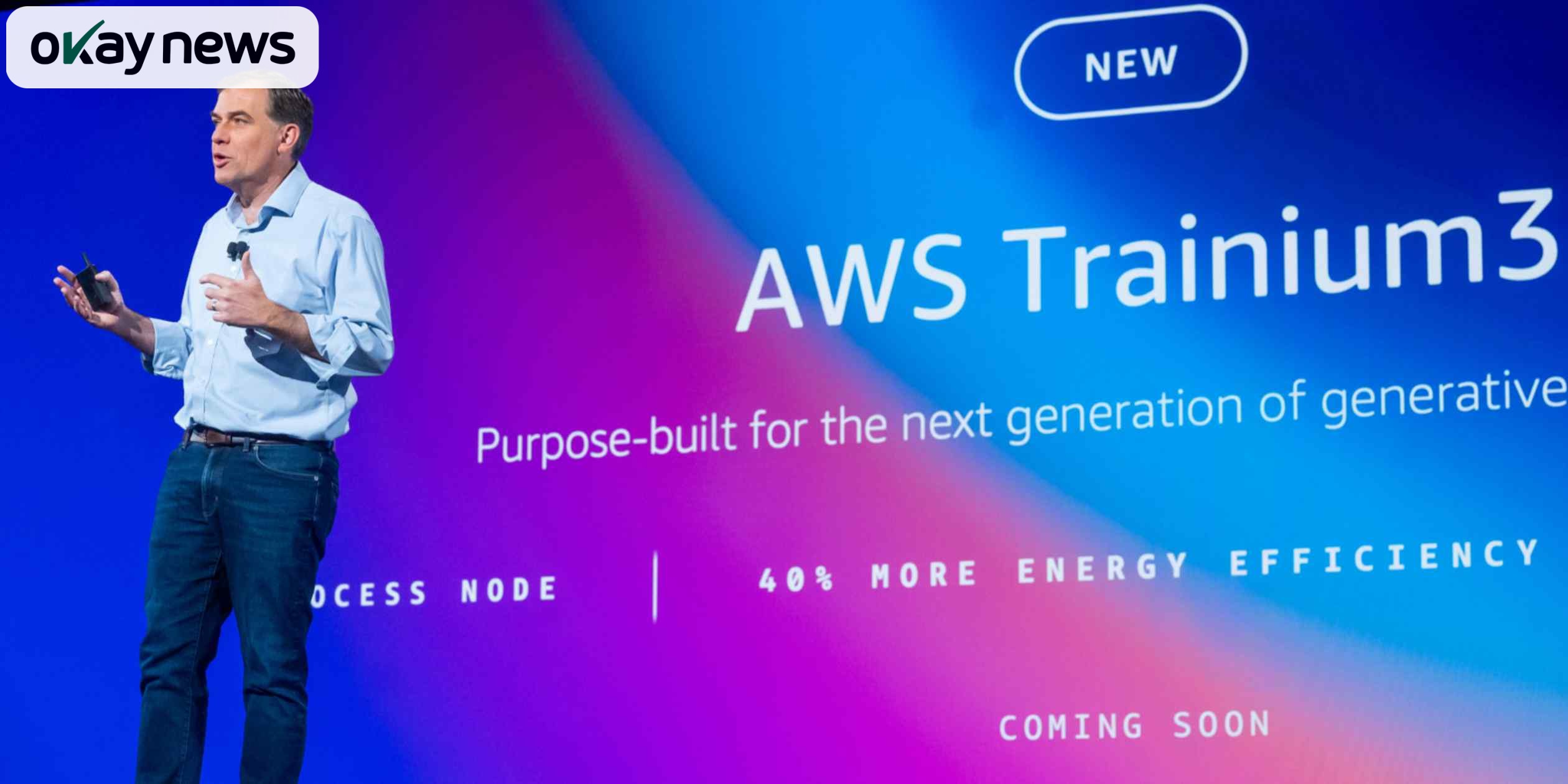

Amazon Web Services has released its latest in-house AI training chip, Trainium3, marking another major step in the company’s push to build its own high-performance, cost-efficient silicon for large-scale AI workloads. The announcement was made Tuesday at AWS re:Invent 2025 in Las Vegas.

AWS also teased the next chip on its roadmap — Trainium4 — which will support Nvidia’s NVLink Fusion interconnect, allowing AWS hardware to link directly with Nvidia GPUs.

AWS launched the Trainium3 UltraServer, a system built around its new 3-nanometer Trainium3 chips and Amazon-designed networking hardware.

Each UltraServer houses 144 Trainium3 chips. AWS says the system delivers more than 4× the performance and 4× the memory of the previous generation, Trainium2, and that these gains apply to both AI training and inference. Thousands of UltraServers can be linked into clusters of up to 1 million Trainium3 chips, 10× the scale of Trainium2 deployments.
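Here is a quick back-of-the-envelope check of those figures. It assumes that every UltraServer in a maximal cluster is fully populated with 144 chips and that "10× the scale" compares maximum chip counts per cluster. Both are our assumptions, not AWS statements.

```python
# Back-of-the-envelope arithmetic using figures AWS quoted at re:Invent 2025.
# Assumptions (not stated by AWS): every UltraServer is fully populated,
# and "10x the scale" compares maximum chip counts per cluster.
CHIPS_PER_ULTRASERVER = 144
MAX_CLUSTER_CHIPS = 1_000_000
SCALE_VS_TRAINIUM2 = 10

# Number of full UltraServers that fit within a 1-million-chip cluster.
full_servers = MAX_CLUSTER_CHIPS // CHIPS_PER_ULTRASERVER
chips_used = full_servers * CHIPS_PER_ULTRASERVER

# Implied Trainium2 cluster size if the new ceiling is 10x the old one.
implied_trainium2_chips = MAX_CLUSTER_CHIPS // SCALE_VS_TRAINIUM2

print(f"{full_servers:,} UltraServers -> {chips_used:,} chips")
print(f"Implied Trainium2 cluster: ~{implied_trainium2_chips:,} chips")
```

The result is roughly 6,900 UltraServers, which is consistent with AWS's description of clusters built from "thousands" of them.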

AWS also highlighted efficiency gains. According to the company, Trainium3 uses 40% less energy than the previous generation, which should lower costs both for AWS's large data center footprint and for its customers.

Major AWS customers like Anthropic, Karakuri, SplashMusic, and Decart have already been running workloads on Trainium3 and reported substantial reductions in inference costs, AWS said.

AWS offered an early look at Trainium4 and confirmed that the next-generation chip is already in development. Its planned support for NVLink Fusion, Nvidia's high-speed chip-to-chip interconnect technology, is a notable move given that Nvidia GPUs remain the industry standard for advanced AI workloads.

The interoperability aims to make AWS more attractive to companies whose AI software is built on Nvidia's CUDA ecosystem.

AWS did not give a release date for Trainium4 but suggested that more details could arrive at re:Invent 2026.